One of the first feature articles I wrote for Reason was about sex robots. This was 2015, and both legacy and social media had cyclical freak-outs about the havoc that sex robots would supposedly wreak. By sex robots, I—and everyone else at the time—meant anthropomorphic robots that were able to be physically intimate with humans and perhaps romantic, too. The gist of my piece was basically calm down—sex robots as people are imagining them don’t actually exist, they won’t for a while, and even if they eventually do, it’s going to be OK.

You are reading Sex & Tech, from Elizabeth Nolan Brown.

Now sex robots are here. We’re seeing the rise of artificially intelligent “chatbot” companions and they are capable of both romance and naughty talk, from the G-rated to the pornographic.

This week, OpenAI CEO Sam Altman even announced that an upcoming version of ChatGPT would not only have more “personality” but also engage in “erotica” with “verified adults.”

There are already all sorts of dedicated platforms for “AI girlfriends” and “sex fantasy chatbots.” Meta has been under fire for allowing its chatbots to engage in “romantic role play” that can get graphic even when those chatting say that they are teens. And Elon Musk’s AI bot, Grok, can go into “sexy mode.”

These are not the sort of sex robots everyone was panicking about a decade ago. They’re not embodied sex machines.

But that hasn’t stopped a lot of hand-wringing about what this all will mean—and bad bills based on the premise that sexual or romantic relationships with chatbots must be stopped.

An Ohio lawmaker, state Rep. Thad Claggett (R-Licking County), has introduced legislation to ban marriages between humans and AI chatbots. “No AI system shall be recognized as a spouse, domestic partner, or hold any personal legal status analogous to marriage or union with a human or another AI system,” states House Bill 469.

This seems to be the “nobody…absolutely no one…” meme come to life. I mean, sure, people can say they’re “married to” a chatbot, but no government authority is out there recognizing these partnerships as legal unions, nor are they about to. This preemptive ban on human-chatbot marriages smacks of attention-seeking (if we’re being charitable) or brain worms brought on by indulging in a little too much tech doomerism.

(The Ohio bill also states that AI systems can’t own property or manage a corporation and says AI developers are legally required to prevent or mitigate harm. So perhaps the real point of the AI marriage ban is to distract from the more substantial elements of Claggett’s proposal.)

Meanwhile, Sen. Josh Hawley (R-Mo.)—who never met a new tech panic he couldn’t embrace—is reportedly drafting a bill that “would ban AI companions for minors,” per Axios.

It’s unclear exactly what this means—whether the bill would totally prohibit minors from talking to AI chatbots designed to act friend-like or merely bar them from chatbots that can turn up the heat. But from Hawley’s comments on X, it sounds like the former. “AI chatbots are literally killing kids,” he posted. “Time to ban chatbot companions for minors and require the chatbots to disclose to everyone they’re not professionals, not counselors, not human.”

At least part of the bill—dubbed the Guidelines for User Verification and Responsible Dialogue (GUARD) Act—is aimed at creating “new crimes for companies which knowingly make available to minors AI companions that solicit or produce sexual content,” according to a memo viewed by Axios.

Look, I don’t think that AI chatbots should be designed to get explicit with people under age 18. But I also came of age in the AOL chatroom era. I don’t think teenagers engaging in a little sexually explicit chatting is anything new or anything to panic about. And, honestly, testing boundaries and exploring sexual themes with an AI chatbot is probably less problematic or dangerous for teenagers than sexting with school peers who may not keep the conversations private or internet strangers who could turn out to be sexual predators or extortionists.

In any event, making it a federal crime for AI chatbots to produce any “sexual content” while chatting with minors risks doing more harm than good. It could bar chatbots from providing any sort of sexual health information to minors or offering any sort of education or advice related to sexuality. And it would, of course, require anyone accessing any sort of AI chatbot that’s allowed to talk about sex at all to prove their identity.

As for adults, I imagine that most people who decide to talk dirty to Grok or ChatGPT are just engaging in a little bit of harmless sexual fantasy, not all that dissimilar to calling a phone sex line a few decades ago.

I am not worried that chatbot relationships will, on a wide scale, overtake human romances, or even that sexy chatbots will put human sex workers out of work.

In the long run, most people want at least an illusion of mutuality—the idea that their amorousness and desires are shared by their companion.

This is why sex workers so often describe their work as being as much about performing romance, lust reciprocity, and emotional intimacy as it is about literal intercourse. This is why the most successful webcam girls and OnlyFans models aren’t always the most beautiful or hottest women on the platform but those who seem the most “real” or are the most skilled at offering a personalized touch to fans. If it were just about getting off, none of these bells and whistles would be necessary.

Of course, everyone’s different. Not everyone places the same value on emotional connection. And among those who do, the level of illusion of humanness provided by AI chatbots may be perfectly sufficient for some. This minority of users may decide that sexy chatbots aren’t just a sometimes-fun distraction but as good as—or better than—a human companion. But it will be a small minority, and regardless, heavy on people unable or unwilling to sustain a real relationship. If it provides some measure of comfort and helps abate loneliness for this cohort, we should just let them be.

In the 2007 book Love + Sex with Robots, artificial intelligence specialist David Levy writes that he’s not worried about human-to-human sex and relationships becoming obsolete. “What I am convinced of,” he writes, “is that robot sex will become the only sexual outlet for a few sectors of the population—the misfits, the very shy, the very sexually inadequate and uneducable—and that for some other sectors of the population robot sex will vary between something to be indulged in occasionally…to an activity that supplements one’s regular sex life.”

Here’s what I wrote about sex robots back in 2015, and I think it still stands whether we’re talking humanoid robots or AI chatbots:

On the margins, sexbots could dissuade some individuals from pursuing human-to-human intimacy and relationships, just as pornography, sex toys, and everything from alcohol to work are also sometimes used to avoid attachments. But it has become clear through countless bouts of cultural and technological change that, for the most part, people see no substitute for knowing and loving another person. To predict sexbots as even moderately widespread stand-ins for sex and relationships reveals a not-insignificant misanthropism.

That isn’t to say that individual use of sex robots is misanthropic. For many men and women, they will remain ancillary to interhuman relationships, more like sex toys than humanity surrogates. For a subset, social robots may provide opportunities for companionship and sexual satisfaction that otherwise wouldn’t exist. When this occurs, we’d all do well to remember that having faith in human institutions and relationships means not panicking over new possibilities. Staying conscientious but open-minded toward the use of social robots, including sex robots, can only enhance our understanding of what it means to be—and to fall for—human beings.

Follow-up: It never ends with porn…

Back in August, I wrote about Alabama’s “Material Harmful to Minors tax,” a 10 percent tax levied on the state’s porn producers and peddlers. The tax—part of a larger measure that also requires web porn platforms to verify visitor ages—is now in effect. The first payments are due on October 20.

Having successfully targeted online porn, the architect of the tax—Rep. Ben Robbins (R-Sylacauga)—is, of course, now going after online platforms more broadly.

“Robbins said he plans to bring more legislation next year addressing technology and algorithms that drive addictive behavior on devices and expects other lawmakers to have bills,” reports AL.com. “There’s going to be a lot of attention on how are we protecting children in this rapidly evolving world,” Robbins said. “We’ve made a stab with pornography, but I think there’s more that needs to be done.”

Follow-up: Newsom vetoes algorithmic “hate speech” bill

Good news: California Gov. Gavin Newsom has vetoed S.B. 771, a bill targeting social media that I covered in Monday’s newsletter.

S.B. 771 would have revised the state’s civil rights law so that social media platforms could be punished for users’ “hate speech” (the First Amendment and Section 230 be damned).

In declining to sign, Newsom said he was concerned that the bill was “premature.”

“Our first step should be to determine if, and to what extent, existing civil rights laws are sufficient to address violations perpetrated through algorithms,” said Newsom in a statement.

More Sex & Tech News

• Astral Codex Ten just awarded a $5,000 grant to Aaron Silverbook “for approximately five thousand novels about AI going well,” in order to attempt to train AI not to kill us all. “Critics claim that since AI absorbs text as training data and then predicts its completion, talking about dangerous AI too much might ‘hyperstition’ it into existence,” Scott Alexander explains:

Along with the rest of the AI Futures Project, I wrote a skeptical blog post, which ended by asking – if this were true, it would be great, right? You could just write a few thousand books about AI behaving well, and alignment would be solved! At the time, I thought I was joking. Enter Aaron, who you may remember from his previous adventures in mad dental science. He and a cofounder have been working on an ‘AI fiction publishing house’ that considers itself state-of-the-art in producing slightly-less-sloplike AI slop than usual. They offered to literally produce several thousand book-length stories about AI behaving well and ushering in utopia, on the off chance that this helps. Our grant will pay for compute. We’re still working on how to get this included in training corpuses. He would appreciate any plot ideas you could give him to use as prompts.

• The Colorado Supreme Court says a teenager who used AI to create fake nude images of his classmates in 2023 cannot be held liable for creating child pornography. “Colorado law prior to 2025 did not criminalize, as a means of sexually exploiting a child, the use of artificial intelligence to generate nude images depicting real children,” notes Colorado Politics. “The legislature acted this year to clearly establish a crime for someone to have or share fake, yet ‘highly realistic,’ images of children that are explicitly sexual.”

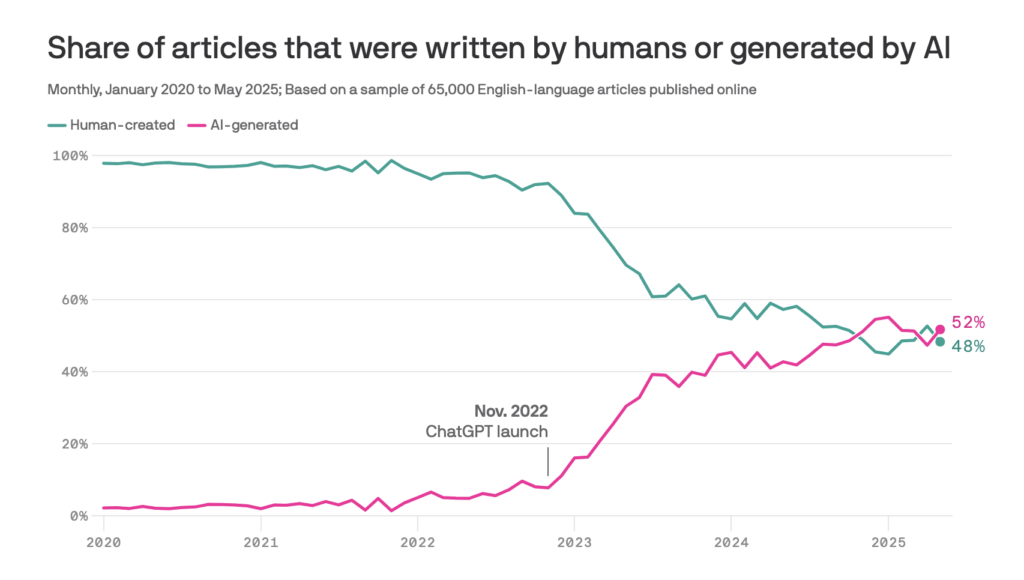

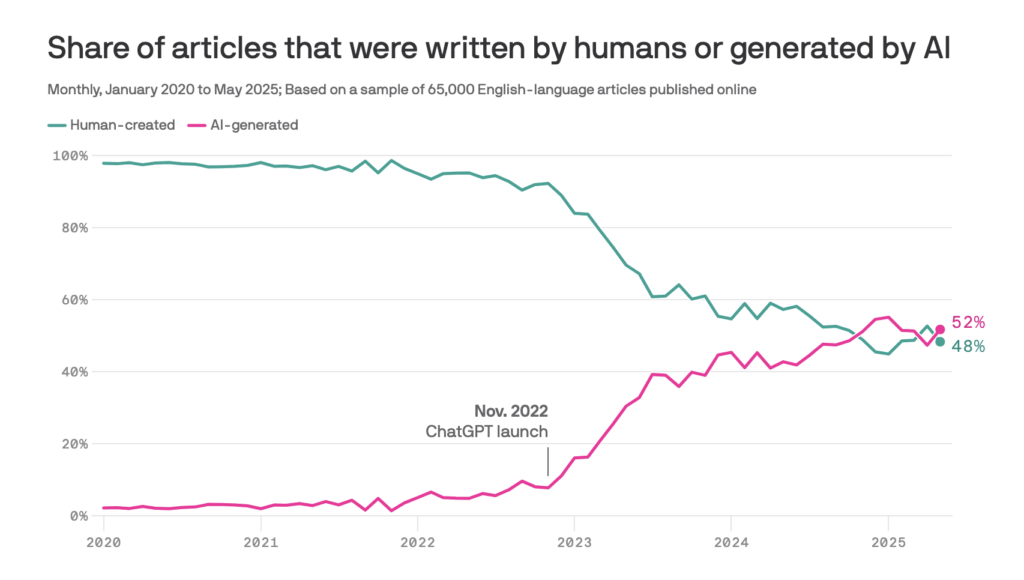

• An unsettling chart, via Axios:

• A Wyoming librarian will get $700,000 as part of a settlement with the state’s Campbell County after the Campbell County Public Library System Board of Trustees fired her for opposing local conservatives’ efforts to have all books with sexual themes removed from the youth section of public libraries. Meanwhile, Wyoming conservatives are considering legislation that would make it easier for people to sue libraries over such content.

• Meanwhile, in Arkansas, “one of the most extreme book censorship laws in recent memory” would “allow jailing librarians and booksellers for keeping materials on their shelves that fall under the statute’s broad definition of ‘harmful to minors,’” notes the Foundation for Individual Rights and Expression (FIRE). “The state’s Act 372 not only makes it possible for librarians to be jailed for providing teenagers with Romeo and Juliet, but also allows anyone to ‘challenge the appropriateness’ of any book in a library.”

• In Kentucky, another attempt to use deceptive trade practices laws to go after tech companies that platform content that politicians don’t like.

• “Ofcom, the UK’s Online Safety Act regulator, has fined online message board 4chan £20,000 ($26,680) for failing to protect children from harmful content,” reports The Register. 4chan’s violation? Failing to respond to Ofcom emails seeking copies of an illegal content risk assessment and its revenue, which Ofcom is seeking as part of an investigation into whether 4chan is violating the U.K.’s dreadful Online Safety Act.

Today’s Image