California is trying to punish social media platforms for aiding and abetting the First Amendment. Senate Bill 771, currently awaiting Democratic Gov. Gavin Newsom’s signature, would “impose meaningful consequences on social media platforms” that allow users to publish “hate speech,” per a legislative analysis from the state’s Senate Judiciary Committee.

One little problem: this is America, and most speech—no matter how hateful or offensive—is protected by the First Amendment.

You are reading Sex & Tech, from Elizabeth Nolan Brown.

California lawmakers are trying to get around this by pretending that S.B. 771 doesn’t actually punish companies for platforming potentially offensive speech; it merely makes them liable for aiding and abetting violations of civil rights law or conspiring to do so. “The purpose of this act is not to regulate speech or viewpoint but to clarify that social media platforms, like all other businesses, may not knowingly use their systems to promote, facilitate, or contribute to conduct that violates state civil rights laws,” the bill states.

Pointing to state laws against sexual harassment, threats and intimidation, and interference with the exercise of rights, it states that a social media platform that violates those statutes “through its algorithms” or “aids, abets, acts in concert, or conspires in a violation of any of those sections” can be held jointly liable for damages with whoever is actually doing the harassing and so on, with violations punishable by millions of dollars in civil penalties.

Guilt by Algorithm

There is currently no tech company exception to California civil rights laws, of course. Any social media company that directly engages in violations is as liable as any individual or any other sort of company would be.

But that’s not what S.B. 771 is about. California lawmakers aren’t merely seeking to close some weird loophole that lets social media platforms engage in threats and harassment.

No, they are trying to hold platforms responsible for the speech of their users—in direct contradiction of Section 230 of the federal Communications Decency Act (CDA) and of Supreme Court precedent when it comes to this sort of thing.

In the 2023 case Twitter, Inc. v. Taamneh, the Court held that federal liability for aiding and abetting criminal or tortious antics “generally requires some special steps on the defendant’s part to aid the illegal actions,” as law professor Eugene Volokh noted in September. “In particular, the Court rejected an aiding and abetting claim based on Twitter’s knowingly hosting ISIS material and its algorithm supposedly promoting it, because Twitter didn’t give ISIS any special treatment.”

To be guilty of aiding and abetting, one must engage in “conscious, voluntary, and culpable participation in another’s wrongdoing,” the Court wrote.

“California law also requires knowledge, intent, and active assistance to be liable for aiding,” notes First Amendment lawyer Ari Cohn, lead tech policy counsel with the Foundation for Individual Rights and Expression (FIRE). But since “nobody really thinks the platforms have designed their algorithms to facilitate civil rights violations,” satisfying this element is never going to work under existing law.

In other words, social media platforms—and their algorithms—aren’t actually guilty of aiding and abetting civil rights violations under the law as it’s currently written. So California lawmakers are trying to rewrite the law. And the rewrite would basically make “being a social media company” a violation.

How so? Well, first, S.B. 771 would “create a new form of liability — recklessly aiding and abetting — for when platforms know there’s a serious risk of harm and choose to ignore it,” notes Cohn. Creating a “reckless aiding and abetting” standard could render social platforms guilty of violating the law even when they don’t specifically know that a particular post contains illegal content.

The bill also says that “a platform shall be deemed to have actual knowledge of the operations of its own algorithms, including how and under what circumstances its algorithms deliver content to some users but not to others.”

Again, this is designed to ensure that a company wouldn’t need to know that a specific post violated some California law to have knowingly aided and abetted an illegal act. Merely having an algorithm that promotes particular content would be enough.

The algorithm bit “is just another way of saying that every platform knows there’s a chance users will be exposed to harmful content,” writes Cohn. “All that’s left is for users to show that a platform consciously ignored that risk.” And “that will be trivially easy. Here’s the argument: the platform knew of the risk and still deployed the algorithm instead of trying to make it ‘safer.’ Soon, social media platforms will be liable solely for using an ‘unsafe’ algorithm, even if they were entirely unaware of the offending content, let alone have any reason to think it’s unlawful.”

Section 230? What Section 230?

One problem here: the First Amendment.

“The First Amendment requires that any liability for distributing speech must require the distributor to have knowledge of the expression’s nature and character,” points out Cohn. “Otherwise, nobody”—online or off—“would be able to distribute expression they haven’t inspected.” For this reason, writes Cohn, what S.B. 771 seeks to accomplish is inherently unconstitutional.

Another problem here: Section 230 of the CDA, which prohibits interactive computer services from being treated as the speaker of third-party content.

“I’m pretty sure that such liability will be precluded by [Section 230],” Volokh writes of S.B. 771.

The California Legislature is trying to get around Section 230’s bar on treating platforms as the speakers of user content by saying that “deploying an algorithm that relays content to users may be considered to be an act of the platform independent from the message of the content relayed.” It’s saying, essentially, that an algorithm is conduct, not speech.

But that is not novel. For more than a decade, people have been trying to get around Section 230 by arguing that various facets of social media and app function are not actually mechanisms for spreading speech but “product design” or some other non-speech element. And courts have pretty routinely rejected these arguments, because they are pretty routinely nonsensical. The things being objected to as harmful are user posts—a.k.a., content and a.k.a., speech. Algorithms and most of these “product design features” merely help relay speech; they’re not harm-causing in and of themselves.

“Because all social media content is relayed by algorithm, [S.B. 771] would effectively nullify Section 230 by imposing liability on all content,” notes Cohn. “California cannot evade federal law by waving a magic wand and declaring the thing Section 230 protects to be something else.”

But for some reason, state lawmakers, attorneys general, and the private lawyers bringing bad civil suits keep thinking that if they make this argument enough times, it’s got to fly. It’s what I think of as the “algorithms are magic” school of legal thinking. If we just throw the word algorithms around enough times, down is up and up is down, and typical free speech precedents don’t apply!

Turning Social Platforms Into Widespread Censors

Of course, if this bill becomes law, it would go way beyond punishing platforms for the relatively rare speech that rises to the level of violating California civil rights law. With such massive penalties at stake for every violation, S.B. 771 would surely induce companies to suppress all sorts of speech that is not illegal and is, in fact, protected by the First Amendment. Why take chances?

Clearly a platform can’t monitor every individual user post and determine conclusively whether it violates sexual harassment statutes. Enter an algorithm that quashes any kind of come-on, any use of sexually degrading language, or perhaps any mention of sexuality.

Clearly a platform can’t monitor every individual user post and determine conclusively whether it violates laws against discriminatory intimidation or threats of violence. Enter an algorithm that suppresses metaphorical and hyperbolic speech (“kill all Steelers fans”), discussions about threats, sentiments about sex, gender, race, and religion that may be offensive or use inflammatory language, and so on.

“Obviously, platforms are going to have a difficult time knowing if any given post might later be alleged to have violated a civil rights law. So to avoid the risk of huge penalties, they will simply suppress any content (and user) that is hateful or controversial — even when it is fully protected by the First Amendment,” writes Cohn.

Newsom has through Monday to decide whether or not to sign S.B. 771. If he doesn’t act on the bill, it will become law without his signature.

Follow-Up: Ohio’s Anti-Porn Law

Ohio Attorney General Dave Yost is threatening to sue adult websites that aren’t verifying the ages of all visitors. “A review of 20 top pornography websites ordered by…Yost revealed that only one is complying with Ohio’s recently enacted age-verification law,” the attorney general’s office states. “Yost is sending Notice of Violation letters to the companies behind noncompliant pornography websites, warning of legal action if they fail to bring their platforms into compliance within 45 days.”

But here’s the thing: a lot of websites that host porn fall under the federal definition of “interactive computer services.” And as I noted last week, Ohio’s age verification law, which took effect September 30, exempts interactive computer services from the mandate to collect government-issued identification or use transactional data to verify that potential porn-watchers are at least 18 years old.

This is a pretty non-ambiguous exemption for websites like Pornhub and many other top porn sites, where users can post videos. But apparently, Ohio is just going to act like the law doesn’t say what it does—setting this up for a big legal showdown in which Yost seems certain to lose.

More Sex & Tech News

• Section 230: The Nation doesn’t get it.

• Speaking of Section 230: The Supreme Court is considering whether to take up a case involving this law and the gay hookup app Grindr. “The plaintiff in the case, John Doe, is a minor who went onto the Grindr app – despite its adults-only policy – and claims to have been sexually assaulted by four men over four days whom he met through it,” per SCOTUSblog’s summary:

He sued the platform for defective design, failure to warn, and facilitating sex trafficking, but the U.S. Court of Appeals for the 9th Circuit ordered his claims dismissed under Section 230’s immunity shield as a publisher of third-party content.

Doe urges the justices to clarify whether CDA Section 230 immunizes apps from liability for their product flaws and activities like geolocation extraction, algorithmic recommendations, and lax age verification that allegedly enable child exploitation. Grindr’s opposition insists that Doe’s claims boil down to third-party content moderation and neutral tools, with no real division among the courts of appeals warranting review.

This gets back to the topic of today’s main section: people trying to get around Section 230 by arguing that tech functions that clearly facilitate third-party speech are actually something else.

• “Everything is television,” writes Derek Thompson on Substack. “Social media has evolved from text to photo to video to streams of text, photo, and video, and finally, it seems to have reached a kind of settled end state, in which TikTok and Meta are trying to become the same thing: a screen showing hours and hours of video made by people we don’t know. Social media has turned into television….the most successful podcasts these days are all becoming YouTube shows….Even AI wants to be television.”

• Checking in on Chinese social media:

A new type of entertainment called ‘vertical drama’ has emerged: shows filmed in vertical format to suit smartphone users. Each episode lasts between two and five minutes, and after a few teaser episodes you have to pay to watch the rest. The dramas are usually taken from popular web novels. A title can be produced in less than a week, and the requirements for the actors are basic: they just have to look good on camera. Nuance and subtlety are the preserve of artistic films; verticals need as many flips and twists as possible. Production is often sloppy. If a line is deemed problematic by viewers, the voice is simply muffled, without any attempt to cut or reshoot. The stories are sensational. One that has got lots of viewers excited is the supposedly forthcoming Trump Falls in Love with Me, a White House Janitor. According to an industry report, vertical drama viewers now number 696 million, including almost 70 per cent of all internet users in China. Last year the vertical market was worth 50.5 billion yuan [$7 billion], surpassing movie box office revenue for the first time. It is projected to reach 85.65 billion yuan by 2027. As one critic put it, the rapid pace and intense conflicts of verticals allow viewers to experience the ‘tension-anticipation-release-satisfaction’ cycle in a matter of minutes.

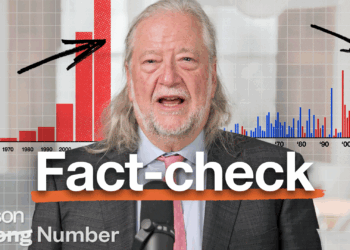

Today’s Image