Huge rise in deepfake images since 2019 (Image: Getty Images/iStockphoto)

A quarter of British people see nothing wrong with, or feel neutral about, creating and sharing so-called deepfake porn photos, a shock new police-commissioned survey has found, even though the number of indecent images has rocketed by 1,780% in the last five years.

The worrying survey, published today, explored the public’s attitudes towards deepfakes, particularly those that are sexual or intimate in nature and disproportionately target women and girls. Of the 1,700 respondents to the nationally representative survey, led by Crest Advisory, almost one in six said they had created some kind of deepfake image or would do so in the future. The figure peaked at a third among those aged 25 to 34. Younger people were also more likely than older people to find it morally acceptable to create or share non-consensual sexual or intimate deepfakes.

The survey also found that three in five people are either very or somewhat worried about being a victim of a deepfake.

Social media was the most common platform where people viewed deepfakes.

The survey was commissioned by the Office of the Police Chief Scientific Advisor to help inform the next steps of the police response to tackle online violence against women and girls.

Policing is working in tandem with the Home Office, academics and industry to find solutions to help detect deepfakes online.

Intimate image abuse, including non-consensual sexual deepfakes, is vastly under-reported, with data from the Revenge Porn Helpline showing that only 4% of people who reported their abuse to the helpline also reported to the police.

AI is helping drive the crime (Image: AFP via Getty Images)

Police are appealing to victims to report intimate image abuse, as chiefs and other agencies voice growing concern that online sexual abuse towards women and girls is rising exponentially.

A new project led by the Revenge Porn Helpline, the National Centre for VAWG and Public Protection (NCVPP) and Digital Public Contact aims to improve the reporting and investigative process to encourage victims to come forward.

This includes police exploring the use of ‘image hashing’, a process that allows police and prosecutors to investigate a crime using a description of the image, rather than sharing the image itself. This means that a victim could have the option to limit who sees the image and avoid the distress of having the image shown in court.
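As a rough illustration of the idea behind image hashing, the sketch below turns an image file's bytes into a short fingerprint that can be recorded and compared without anyone opening the image. It is an assumption-laden example, not the police's actual system: the article does not say which hashing method is being explored, and the function names `image_fingerprint` and `matches_reported` and the sample bytes are hypothetical. It uses SHA-256 from Python's standard library, which only matches byte-for-byte identical copies; real-world tools often rely on perceptual hashes that can also match resized or re-encoded copies.

```python
import hashlib


def image_fingerprint(image_bytes: bytes) -> str:
    """Return a SHA-256 hex digest of the raw image bytes.

    The digest identifies the file but cannot be turned back into the image.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def matches_reported(image_bytes: bytes, reported: set[str]) -> bool:
    """Check whether an image's fingerprint is in a set of reported fingerprints."""
    return image_fingerprint(image_bytes) in reported


# Hypothetical stand-in for the contents of a reported image file.
reported_image = b"example image bytes"

# Only the fingerprint is stored in the (hypothetical) case file.
case_file = {image_fingerprint(reported_image)}

# An identical copy found elsewhere matches; a different file does not.
print(matches_reported(reported_image, case_file))
print(matches_reported(b"a different image", case_file))
```

In this sketch an investigator could confirm that a file found online is the same one a victim reported by comparing fingerprints alone, which is the property that lets fewer people see the image itself.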

Police and the Revenge Porn Helpline are also working with the Foreign, Commonwealth and Development Office to ensure international borders don’t hinder activity to take down imagery and tackle perpetrators.

Detective Chief Superintendent Claire Hammond from the National Centre for VAWG and Public Protection, said: “Sharing intimate images of someone without their consent, whether they are real images or not, is deeply violating.

“The rise of AI technology is accelerating the epidemic of violence against women and girls across the world. Technology companies are complicit in this abuse and have made creating and sharing abusive material as simple as clicking a button, and they have to act now to stop it.

“However, taking away the technology is only part of the solution. Until we address the deeply ingrained drivers of misogyny and harmful attitudes towards women and girls across society, we will not make progress.

“If someone has shared or threatened to share intimate images of you without your consent, please come forward. This is a serious crime, and we will support you. No one should suffer in silence or shame.”

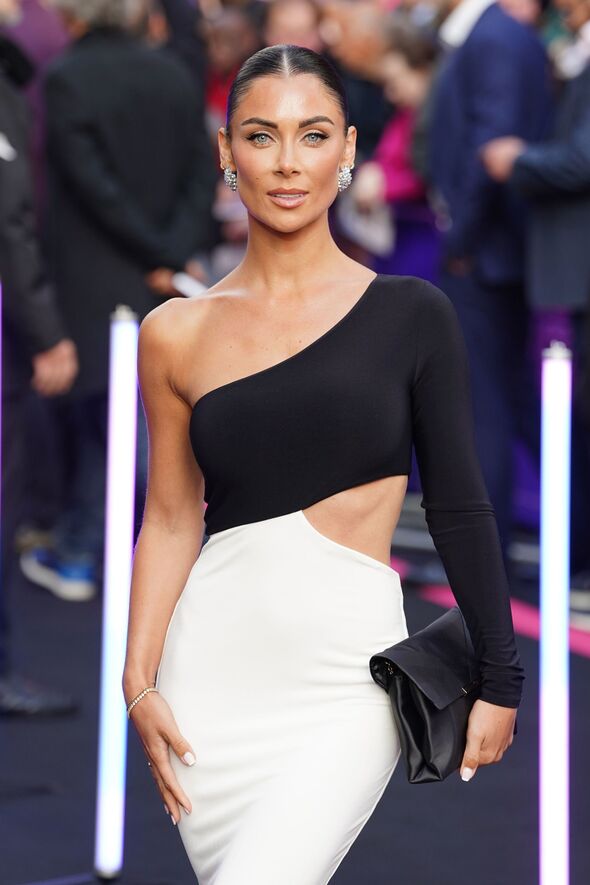

Cally Jane Beech attending the world premiere of Ballerina, at Cineworld Leicester Square, London (Image: PA)

Cally-Jane Beech, award-winning activist and influencer, has been campaigning for better protection for victims of deepfake abuse. She said: “We live in very worrying times; the futures of our daughters (and sons) are at stake if we don’t start to take decisive action in the digital space soon.

“It’s an encouraging start to hear about the changes being made to the reporting system with regards to deepfakes, and I truly hope the additional training and guidance enable victims to feel supported from the moment they pick up the phone – but the conversations need to begin earlier. At home. Parent to child.

“We are looking at a whole generation of kids who grew up with no safeguards, laws or rules in place about this, and now seeing the dark ripple effect of that freedom.

“Stopping this starts at home. Education and open conversation need to be reinforced every day if we ever stand a chance of stamping this out.”